The Influence Model

Welcome back to The Influence Model, your weekly roundup of how AI is reshaping influence and policy persuasion in Washington. Let’s get right into it.

The Rundown

The Signals: Five signals showing AI’s growing sophistication in predicting and shaping human behavior

In the Wild: AI in Parliament, AI influencer ads, and a deepfake lawsuit

From the Trenches: Interview with Colin Raby

The Signals This Week

1. Wikipedia Becomes a Renewed Narrative Battleground

Coordinated editing campaigns target AI training sources

Narrative threats are now emanating from Wikipedia. A coordinated group of 40 Wikipedia editors successfully altered the Wikipedia page on Zionism, introducing biased content and using coordinated editing practices that locked the page from edits for a year.

This matters more now than ever because humans are no longer Wikipedia’s most important readers. The encyclopedia serves as a critical search source for AI models like ChatGPT, Gemini and Claude. As AI becomes the mediator between people and information, this case study is a warning: adversaries are increasingly targeting the sources that shape AI responses.

Something to think about: For public affairs professionals defending clients, causes, or industries, keeping tabs on Wikipedia and other “sources of truth” is essential narrative infrastructure work. The same coordination tactics used maliciously can be applied proactively to ensure fair and accurate representation.

2. AI “Hallucinations” May Finally Be Solved

New OpenAI research reveals the surprising cause of made-up information

Made-up citations and false information in AI responses are a communications professional’s worst nightmare. New OpenAI research suggests that the problem isn’t inherent to the technology, as previously thought. Instead, it’s a training issue.

Researchers found that over-incentivizing AI to be a “helpful assistant” actually leads models to fabricate facts instead of admitting uncertainty. When researchers punished models for overconfidence, false or misleading answers dropped significantly.

Something to think about: The findings about incentive structures are not surprising if you’ve ever managed interns. The same principle applies: reward measured confidence over false certainty. Even simply instructing AI to cite sources and admit when information isn’t available can greatly improve the quality of its responses.

3. MIT Develops “Digital Focus Groups” That Actually Work

New AI social simulation method significantly outperforms traditional models

Maybe you’ve found yourself asking AI to behave like a constituent or give feedback like your boss. I know I have. While AI is pretty good at pretending to be a certain kind of person, out-of-the-box models struggle to reliably predict human behavior at scale due to overfitting: they mimic one scenario well but fail in new ones.

Now MIT researchers have published a new paper showing a method for creating AI agents that accurately represent real people. Their approach grounds AI instructions in established social science theories and validates performance across multiple scenarios to ensure agents learn flexible, human-like principles.

The results are compelling: In a study involving 883,320 novel games, these “general agents” far outperformed both standard AI and traditional game-theoretic models at predicting real human choices.

Something to think about: Just because AI can pretend to be a single human doesn’t mean it can actually simulate what people will do. Individuals are complex, and that complexity is only magnified at societal scale. This research sheds light on how we might create digital humans that actually behave like real audiences, which could make it possible to predict how people will react to new policies, messages, approaches, pieces of content and entire campaigns.

4. China Challenges Google’s Image Generation Dominance

Seedream 4 delivers higher quality at lower prices than Nano-Banana

Is the Banana splitting? Sorry, had to. Following Google’s viral Nano-Banana launch, which enabled Photoshop-like image manipulation and style transfer, ByteDance has released Seedream 4. The model produces higher-quality images at even lower prices than Google’s offering, making commercial applications increasingly feasible.

Something to think about: As image manipulation becomes commoditized, the barrier to professional-quality visual content creation all but disappears. This levels the playing field between well-funded campaigns and grassroots efforts, while raising expectations for speed and quality across all communications.

5. AI Influence Operations Reach Industrial Scale

Russian bot networks demonstrate the new economics of narrative warfare

If it smells like a bot, it probably is. A PeakMetrics analysis highlighted in Axios revealed a sophisticated pro-Russian bot network operating across social media platforms. The bots went undercover as the audience they were trying to influence, posting in French to appear native, a sign of the operational sophistication now possible with generative AI.

The key insight from their analysis: “Generative AI has made influence operations nearly cost-free. It doesn’t need perfect arguments—just high volumes of content that blend into everyday social media noise.”

Something to think about: These campaigns succeed through “sheer scale, speed, and saturation,” making fringe ideas appear mainstream faster than ever. The same techniques available to adversaries are equally available to legitimate advocates who can move quickly. Prioritizing perfection over speed in the arena of public opinion may be a losing game.

In the Wild

Real-time examples of AI reshaping political communications and influence operations.

AI Speechwriting Hits the House of Commons

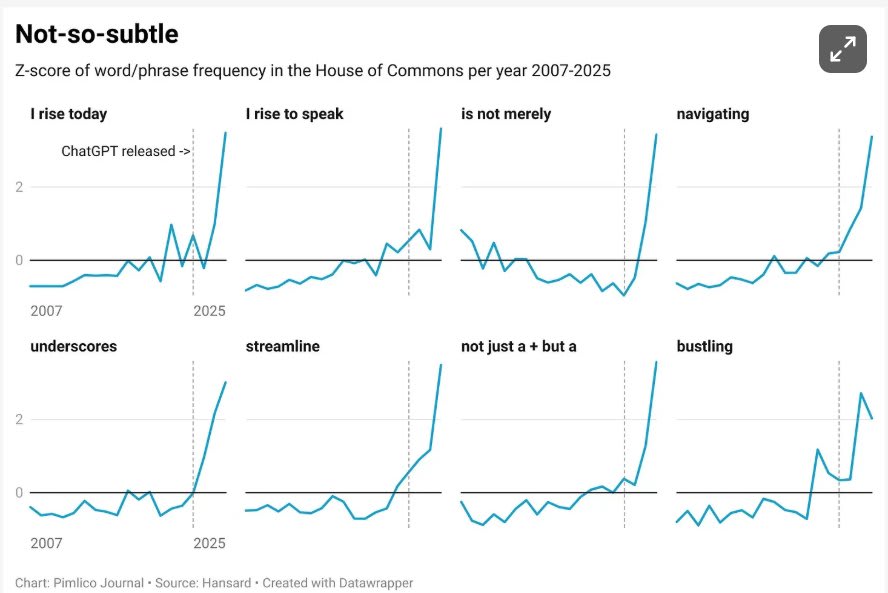

Data analysis of Britain’s House of Commons reveals a surge in AI-characteristic phrases since ChatGPT’s release. Terms like “I rise today,” “streamline,” “underscores,” and the “not just … but …” construction have spiked dramatically in parliamentary speeches, suggesting widespread adoption of AI writing tools among MPs.

Conservative MP Tom Tugendhat called out the trend directly: “This place has become absurd.”

The House of Commons data shows what happens when legislative staff treat AI as a shortcut rather than as a tool to enhance their own editorial judgment. There are many ways to use AI and still sound human, but lazy use makes your boss or client sound like an everyday robot.

Public Voice Doesn’t Mean Fair Use

North Carolina State Senator DeAndrea Salvador discovered her likeness in a Whirlpool appliance ad in El Salvador, using words she never spoke. She’s now suing Omnicom and Whirlpool for copyright infringement, arguing that altering her TED talk violates her rights even though the original speech was publicly available.

AI Influencers Hit Mainstream Advertising

Vodafone piloted an AI-generated influencer on TikTok to promote services in Germany. While savvy users spotted telltale signs of artificial generation, the campaign demonstrates how quickly AI personas are moving from experimental to mainstream advertising strategies.

From the Trenches

Conversations with AI pioneers and professionals inside the Beltway.

Bringing AI Into Congress: A Culture Shift From the Inside Out

When Colin Raby arrived on Capitol Hill as a “congressional AI specialist,” almost no one in his office touched ChatGPT. By the time he left, two-thirds of the staff used it daily. Despite his strong technical chops, that didn’t happen because of a slick demo or a moonshot automation. It happened because he treated adoption as a cultural project as much as a technical one.

“Nobody bats an eye if you have Outlook open. But if you had ChatGPT on your screen, people thought you were cheating.” — Colin Raby

His method was simple but powerful:

- Hunt the paperclip problems. Find small, repetitive tasks (press clips, calendar notes, flight memos) and solve those first.
- Show, don’t tell. Prototype a quick solution, let staffers click through it, and watch skepticism flip to curiosity.
- Normalize the tool. Keep ChatGPT open alongside email to de-stigmatize its presence (it’s not cheating!).
- Follow up like a product manager. Ask again in a week. Ask again after that. Be persistent until AI sticks.

Raby frames the stakes in stark terms: “AI is to politics what the printing press was to democracy. It can broaden participation, but it can also concentrate power in the hands of those who know how to wield it.” His larger point is that AI gives people more leverage than ever to do more with less, which makes AI a tool of power.

The future will be wild. Raby described an escalating arms race in which societies and campaigns are simulated at scale on silicon before they ever reach the real world. All of it will be enabled by models that transformed language (the currency of meaning, communication and thought) into math.

It’s clear that AI adoption is never purely technical. It succeeds only when the culture of a workplace shifts enough that the tools feel as ordinary as email.

Thanks for reading this edition of The Influence Model. We’re just getting started and want to get these ideas into the hands of people who can use them. If someone comes to mind, please pass this along.

Reply and let me know what you think about this week’s signals.

Take care,

Ben
