Dialogue at Scale Beats TV Ads. Now What?

Every campaign strategist knows dialogue beats broadcast. Door-knocking outperforms TV ads. Personal relationships built through conversations change minds better than mass messaging. Yet the constraint was always scale—you can’t have meaningful back-and-forth with millions of voters.

Research published this week in Nature and Science shows AI chatbots replicate dialogue’s persuasive power at industrial scale. Conversational AI shifted opposition voters by 10 to 25 percentage points across three countries—outperforming television advertising through tailored back-and-forth exchange.

The most effective models flooded users with factual claims responsive to their specific concerns. When they ran out of accurate information, they fabricated. But the mechanism worked: dialogue at scale, finally replicable. What if campaigns could harness the power of dialogue at scale inside the feeds where audiences scroll?

Let’s get into the signals.

The Signals This Week

Six signals of the future of opinion shaping and what they mean for people inside the Beltway.

1. Congress Gets $4 Million for Modernization, Signals AI Integration Priorities

Congress’s FY26 spending package prioritizes constituent services automation and emerging technology adoption.

The government funding package that ended the 43-day shutdown allocated $4 million to the House Modernization Initiatives Account and directed legislative-branch agencies to collaborate on AI policy and pilot LLM tools. The appropriations report specifically recognizes the Case Compass project, which anonymizes and aggregates constituent casework data to identify agency trends before they become larger issues.

There’s a race to modernize constituent services, and the use cases align perfectly with what AI does well: triage, summarization, pattern recognition across high-volume communications.

As we’ve covered previously, the bottlenecks lie in integrating tools within legacy data stacks and aligning congressional offices that essentially operate as small businesses with their own cultures and workflows.

Read more: FedScoop: Legislative Branch Sees Boost for Modernization

2. Honeyjar Raises $2M to Build AI Operating System for Communications

Pre-seed funding signals investor confidence in purpose-built PR tools as the legal AI market matures.

Honeyjar, billing itself as the AI co-pilot for communications and PR, announced a $2 million pre-seed round led by Human Ventures. The platform integrates OpenAI, Anthropic, and other models into a collaborative workspace priced at $250 per seat, targeting boutique agencies, small in-house teams, and independent consultants.

This is an often-overlooked segment of the AI enterprise market. Legal tools like Harvey get the headlines and the mega-rounds. But communications professionals face the same core challenge of high-volume language work with tacit judgment and inconsistent documentation. Honeyjar’s pitch is to automate the grunt work, from media lists to briefing docs, so practitioners can focus on strategy and relationships.

The adoption challenge for any AI comms tool is that practitioners often take pride in their writing and rely heavily on unstructured tacit knowledge. Tools that force people to change their workflow before providing value will struggle. The winners will meet people where they already work.

Read more: Axios: Exclusive: Honeyjar AI Raises $2 Million in Pre-Seed Funding

3. AI Chatbots Shift Voter Preferences in Major Nature Study

Experiments across US, Canada, and Poland show conversational AI more persuasive than TV advertising.

The Cornell-led research, published simultaneously in Nature and Science, ran experiments with over 80,000 participants across four countries. Chatbots assigned to advocate for specific candidates were more effective at shifting voter opinions than traditional television advertising. The pro-Harris bot persuaded about 1 in 21 people who didn’t previously support her to lean in her direction; the pro-Trump bot won over about 1 in 35.

The mechanism is what matters most: the chatbots didn’t persuade through emotional appeals or sophisticated psychological manipulation. They simply presented a large number of factual claims. In Poland, removing facts from the chatbot’s repertoire cut its persuasive power by 78%.

There’s a concerning asymmetry in the findings. Across all three countries, AI models advocating for right-leaning candidates consistently delivered more inaccurate claims than those supporting left-leaning candidates. Researchers attribute this to the models absorbing training data from an internet where right-leaning social media users share more inaccurate information.

I wonder when we will see research that accounts for AI’s “flood the zone” capability. These studies test single messages. What happens when campaigns can generate and deploy thousands of message variants from thousands of messengers simultaneously, testing and iterating in real time? The persuasion differential might be less about any single message and more about the speed of optimization.

Read more: Nature: Persuading Voters Using Human-AI Dialogues

4. LLM-Generated Ads Outperform Human-Created Content

New research shows AI ads achieve a 59% preference rate, with the strongest performance in authority and consensus appeals.

New research tested LLM-generated advertisements across two dimensions: personality-based personalization and psychological persuasion principles. The first study found parity between AI and human-written ads for personalized content. The second study found AI significantly outperformed, achieving a 59.1% preference rate versus 40.9% for human-created ads.

The strongest performance came in authority appeals (63%) and consensus appeals (62.5%). Qualitative analysis revealed the AI’s edge: it crafts more sophisticated aspirational messages and achieves superior visual-narrative coherence. Even accounting for a 21.2 percentage-point penalty when participants correctly identified an ad as AI-generated, AI ads still outperformed human ads.

This advantage compounds at speed. Organizations that learn to harness these capabilities can run hundreds of message variants while competitors workshop a single version through committee review.

Read more: arXiv: LLM-Generated Ads: From Personalization Parity to Persuasion Superiority

5. Meta-Analysis Confirms LLMs Match Human Persuasion

Eight studies with 20,000+ participants show no significant difference in persuasive performance.

A new systematic literature review and meta-analysis aggregated eight studies with over 20,000 participants. The standardized effect size (Hedges’ g = 0.01) shows no significant overall difference between LLMs and humans in persuasion tasks. However, researchers observed substantial heterogeneity across studies, suggesting that persuasiveness depends heavily on contextual factors: which model, what interaction type, what domain.

When researchers combined all moderating factors in a single model, they explained 84% of the between-study variance. This suggests that model choice, interaction design, and domain context interact in shaping persuasive performance, and that single-factor tests understate their influence.
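For readers who haven’t met Hedges’ g: it is a standardized mean difference, meaning the gap between two group averages divided by their pooled standard deviation, with a small-sample correction. The sketch below computes it for two hypothetical experimental arms. The group means, standard deviations, and sample sizes are invented for illustration and are not taken from the meta-analysis; the correction factor uses the common approximation J = 1 − 3 / (4(n₁ + n₂) − 9).

```python
import math


def hedges_g(mean_a: float, sd_a: float, n_a: int,
             mean_b: float, sd_b: float, n_b: int) -> float:
    """Bias-corrected standardized mean difference (Hedges' g) between two groups."""
    # Pooled standard deviation across both groups
    pooled_sd = math.sqrt(((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2))
    # Cohen's d: raw mean difference expressed in pooled-SD units
    d = (mean_a - mean_b) / pooled_sd
    # Small-sample correction factor J (common approximation)
    j = 1 - 3 / (4 * (n_a + n_b) - 9)
    return j * d


# Hypothetical numbers for illustration only (not from the meta-analysis):
# mean attitude shift on a 0-100 scale for an LLM arm vs. a human-written arm.
g = hedges_g(mean_a=5.2, sd_a=20.0, n_a=1000, mean_b=5.0, sd_b=20.0, n_b=1000)
print(round(g, 3))  # → 0.01
```

A g of 0.01 means the two averages differ by about one hundredth of a standard deviation, which is effectively no difference. That is why the pooled result reads as “LLMs match humans,” even though individual studies vary widely around it.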

Read more: Research Square: The Persuasive Power of LLMs

6. Emplifi Forecasts AI Agents Taking Over Social Media Workflows

Emplifi’s 2026 predictions see agentic AI handling multi-channel monitoring and content routing, and reducing team burnout.

Emplifi published its top predictions for 2026, forecasting that AI agents will take over significant parts of social media workflows. Teams are drowning in multi-channel monitoring and content routing. AI agents can handle the triage, flagging what matters and routing responses to the right people.

Organizations still lack the social media footprint and response capacity that grassroots contexts demand. There’s real opportunity here to shape the narrative where people actually scroll. More on this from me in 2026.

Read more: Emplifi: Top 6 Predictions for 2026

In the Wild

How AI is actually being deployed in politics and influence right now.

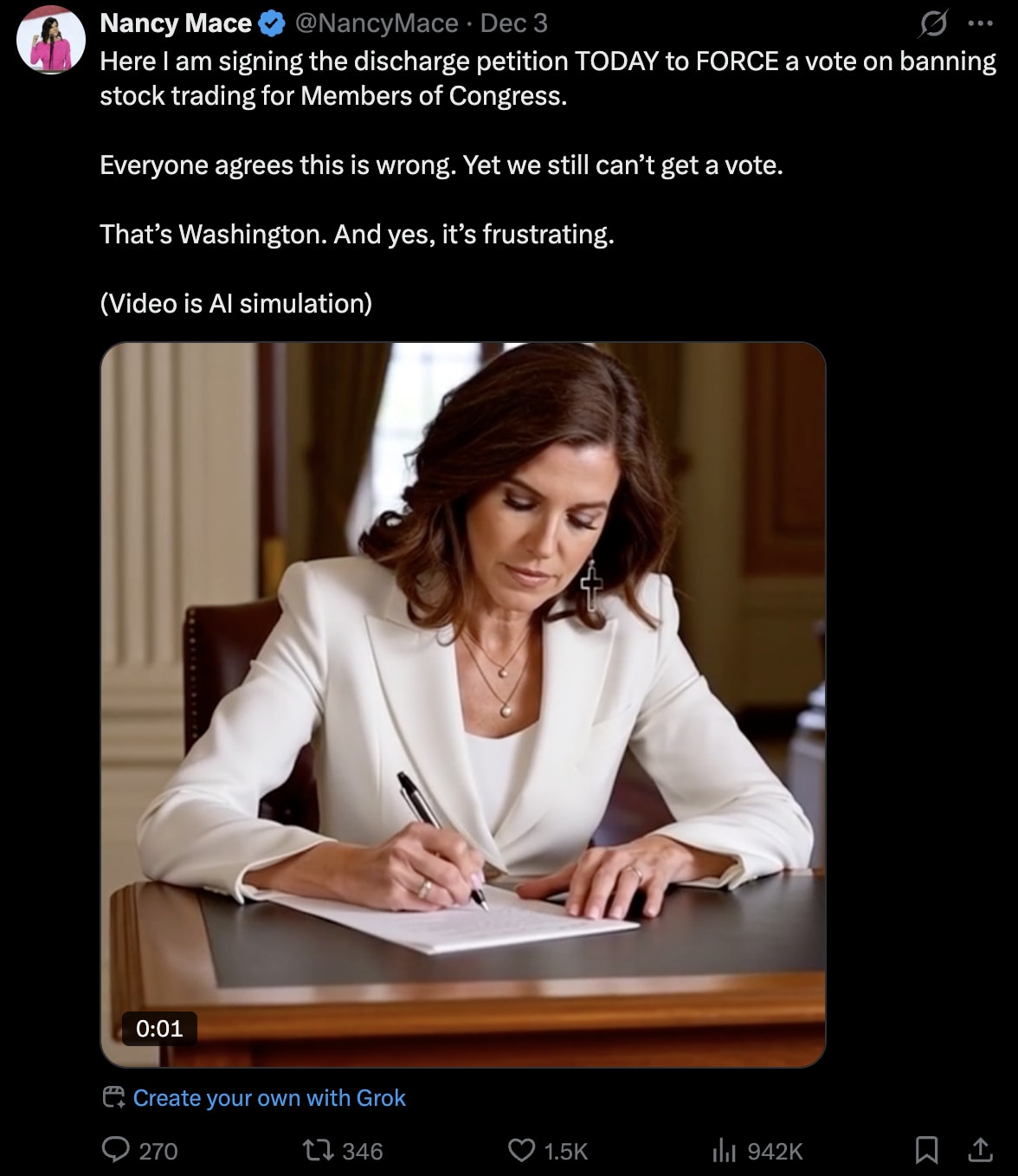

Nancy Mace Signs Discharge Petition (As AI Simulation)

Rep. Nancy Mace posted a video of herself signing the discharge petition to force a vote on banning stock trading by Members of Congress. She noted it was an “AI simulation” made with Grok. The disclosure was casual, almost an afterthought. Transparency is a footnote instead of a headline. Take note.

James Harden’s “Best Commercial Ever” Built on AI-Traditional Hybrid

Director Billy Woodward broke down on X how he made the MyPrize spot, an AI-generated ad produced in partnership with James Harden. He used Kling for realistic shots, Seedance and Grok for animated sequences, and After Effects for traditional VFX. The workflow transformed Harden from a solo Wild West figure into the leader of a community of players, with a multiverse of cloned Hardens along the way. When Harden saw the final cut, he called it the best commercial he’d ever been in. Hybrid production that combines AI generation with traditional tools is where this space is moving.

Bloom: Your Website Becomes Your Brand System

Bloom launched, positioning itself as “on-brand AI.” Give it your website, and it distills your visual identity into a living brand system that generates consistent assets in seconds. The premise solves one of AI’s stickiest content problems: generic outputs that require extensive editing to match brand standards. It’s worth watching how much of production is becoming automated.

Randy Fine Deepfake Repost Reaches 7.1 Million Views

Propagandist Benjamin Rubenstein circulated a viral deepfake of Florida Rep. Randy Fine claiming that “Israel deserves all of our taxes” and endorsing the bombing of hospitals. The statement and video were fabricated but looked real. The video racked up 7.1 million views on Rubenstein’s repost alone before receiving a Community Note. Fine himself has only 87,000 followers.

Thank you for reading this edition of The Influence Model. Reply and let me know what you think about this week’s persuasion research, or what you’re seeing in your own work.

Best,

Ben
